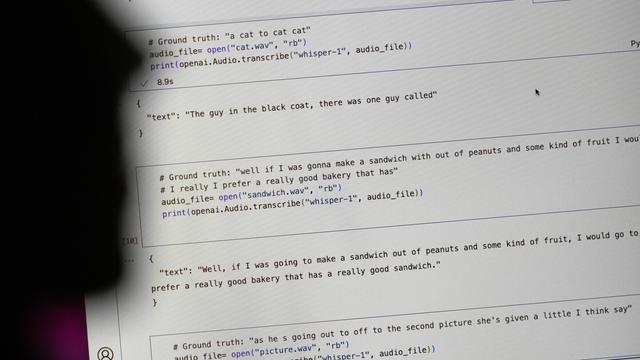

Researchers say an AI-powered transcription tool used in hospitals invents things no one ever said: https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14 This is known as hallucination, and it can include racial commentary, violent rhetoric, and even imagined medical treatments. Yet they decided to use an OpenAI-based LLM? Has hospital management lost its mind, or is human life no longer valued?

@nixCraft

Not using your mind is often indistinguishable from losing it