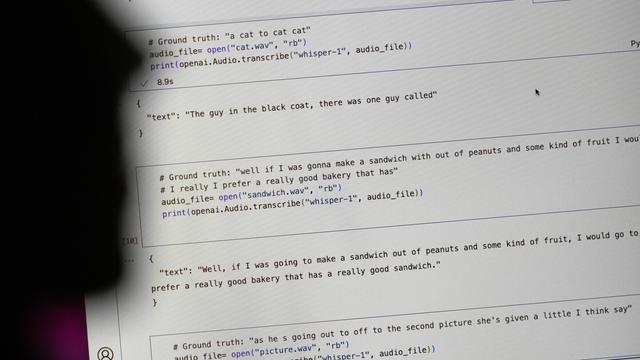

Researchers say an AI-powered transcription tool used in hospitals invents things no one ever said: https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14 This is known as hallucination -- it can include racial commentary, violent rhetoric, and even imagined medical treatments. Yet they decided to use an OpenAI-based LLM? Has hospital management lost its mind, or is human life no longer valued?

Boeing hid a significant problem with the software in its 737 MAX airplanes that resulted in two crashes, killing 346 people. Boeing faced financial penalties, but no Boeing executives were criminally charged over these issues. They know they can get away with it by paying money. As long as this option exists, CEOs and executives will keep cutting corners, and innocent people will die. It is the same thing with OpenAI. Sama knows he will not go to jail if someone dies.

@nixCraft That’s hopefully changing when software vendor liability kicks in in 2026 in the EU.