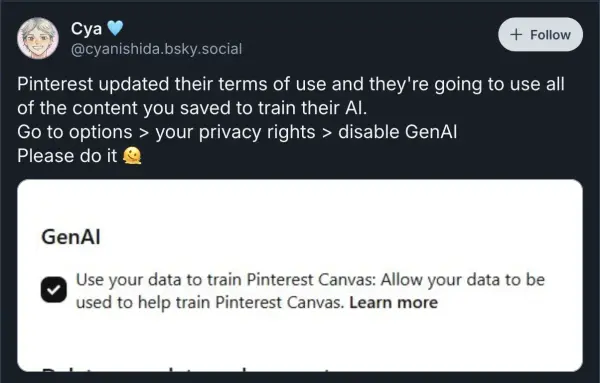

Pinterest have announced that they intend to use all pinned content for training ML (aka "AI") models, opting it all in without the approval of the original creators.

In the past, it was apparently easy to find all Pins on Pinterest from a particular source. It's probably no coincidence that this feature has now disappeared, making it impossible for content owners to identify their intellectual property without resorting, as @camilabrun has done, to custom scripts.

I’m in something of a cleft stick, as I license my photographs and art under Creative Commons licenses. I like the idea of human beings being able to use what I make (subject to some small restrictions). I'm less thrilled by the idea that some BigTech company can ingest everything I've ever made so they can fill the world with worthless slop based on digested versions of my work and everyone else’s.

2/

Unfortunately, there doesn't seem to be a variant of the CC license that says "Free to use … but not for this." I use BY-NC licenses, which require attribution & prohibit commercial use. An AI image generator is never going to give attribution, and most are made and sold by commercial companies ... but Creative Commons themselves point out that training for generative AI may be covered by fair use, in which case the license conditions wouldn't apply at all.

3/

https://creativecommons.org/2023/08/18/understanding-cc-licenses-and-generative-ai/

The problem is that training a model is (other things being equal) value-neutral. It's USING a model where the ethical issues arise & where "unfair use" starts creeping in.

If someone uses a model trained on things I create to advance science or medicine, I'm all for it; if they use it to generate slop, deprive other creators of a livelihood, or power surveillance engines, I'm not. But there's no way to express my preferences.

4/

An AI-generated work based on a model could fail several of the fair-use tests (purpose & character, amount & substantiality, effect on the market or value of the original). But it's unclear whether "fair use" considerations still apply once the model has been trained; it's almost as if model training (a "fair use") acts as a firewall protecting the makers and users of generative tools (often an "unfair" use) from consequences. It's a transitivity problem: the fairness of the training step gets treated as if it carried over to whatever the model is later used for.

5/

There's also a 'remix effect'. Like cryptocurrency mixers, which blur the origins of funds by scrambling transactions together, generative tools blend inputs from many different sources. Even if a generator spits out a superficially close copy of someone's original work, the user or maker can say "Ah, no, this was the product of all these OTHER works too," & claim to be using only a non-substantial part of the original.

6/

TL;DR: "Fair use" is good and valuable, & model training should be protected by it.

But if a company like Pinterest can decide to use the work of others for free for its own profit, or the makers or users of a generative tool can use the "fair use" of model training to authorize decidedly unfair uses, then something has gone wrong & we need better legal (and ethical) frameworks to address this.

/END