What is something you learned in math that made you lose a sense of innocence-- knowledge that you can never unlearn that changes the world forever?

For me? That rational numbers are outnumbered by irrational numbers-- to such an extreme degree they are functionally as sparse as the integers-- or even the powers of ten.
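One way to make "as sparse as the integers" precise, as a sketch using standard results rather than anything stated in the post: the rationals are countable, so they have the same cardinality as the integers, and they occupy zero length on the real line. Here $q_1, q_2, \dots$ is any enumeration of $\mathbb{Q}$, $\lambda$ is Lebesgue measure, and $\varepsilon > 0$ is arbitrary (all notation is introduced here for illustration).

$$
|\mathbb{Q}| = |\mathbb{Z}| = \aleph_0 < 2^{\aleph_0} = |\mathbb{R} \setminus \mathbb{Q}|
$$

$$
\mathbb{Q} \subseteq \bigcup_{n=1}^{\infty} \left( q_n - \frac{\varepsilon}{2^{n+1}},\; q_n + \frac{\varepsilon}{2^{n+1}} \right)
\quad\Longrightarrow\quad
\lambda(\mathbb{Q}) \le \sum_{n=1}^{\infty} \frac{\varepsilon}{2^{n}} = \varepsilon
$$

Since this holds for every $\varepsilon > 0$, $\lambda(\mathbb{Q}) = 0$. The rationals are still dense in the reals, which the integers are not, so "sparse" here is meant in the sense of cardinality and measure only.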

@futurebird philosophy of maths undergraduate course where I left furious & depressed at the same time.

Main gist of that lecture was, “everything you know, been taught & ever learned, is just plain wrong, in error & it is, at best, an approximation, with integrals between human perception and actual full reality.”

Furious - so I’ve spent my whole life in school up to this point learning what exactly?

Depressed - This is shite! How on earth, stars & universe am I gonna figure things out?

@dahukanna @futurebird I have a chemistry degree, and basically every year, they’d do an intro lecture saying that same thing:

Chem 101: “here’s how stuff basically works”

Organic chemistry: “yeah, everything we said about bonds and atoms was a lie.”

Quantum chemistry: “hah! You believed that crap? None of that stuff even exists! At best it’s a probability distribution.”

It was harrowing, but also freeing and a lot of fun.

I wish programming was taught the same way.

@lkanies @futurebird I am that 79% of people with chemistry degrees who end up doing something else and no, I’m not an accountant !

@dahukanna @futurebird heh, same. I became a SysAdmin, then programmer, then software entrepreneur.

I’m a huge fan of basic science training, for most any career

@lkanies @dahukanna @futurebird

While a lot of programming courses are pipelines for employment, when I did my CS graduate work, I had similar experiences. "Compiler Design" was the single hardest course I ever took. Made me realize most of my earlier classes were basically "you tell the magic box to do it, and it just works if you did it right".

@GregNilsen @dahukanna @futurebird god I would have loved that class. I never took a CS course, but taught myself basic compiler stuff on my own time, mostly from the dragon book.

@GregNilsen @lkanies @dahukanna @futurebird It's turtles all the way down mate.

You think a computer is digital electronics? There's no such thing, there's some nasty real world analogue electronics to make the digital "magic box" work. And a few layers down, a whole bunch of quantum stuff ...

@TimWardCam @lkanies @dahukanna @futurebird

Absolutely...once you get into the "Why do we use binary and not Base10?" realm, suddenly a lot of other disciplines get involved.

@lkanies @dahukanna @futurebird I do not. I hate this "everything/some-things-we-are-not-going-to-specify was incorrect." How am I supposed to reason then? Engineering was taught that way, and I hated it.

@_ZSt4move @dahukanna @futurebird eh. There’s no way in hell I could have understood quantum chemistry without all that background. Most people could barely pass o-chem after a year of 101.

The only real choice was whether to admit that previous years were over-simplistic models, or to act like there was no cognitive dissonance.

But, obviously, it does not work for everyone.

@lkanies @dahukanna @futurebird I have no clue about chemistry, unfortunately. And I am not saying "start with the most complicated case." That does not work in some cases. But list preconditions for the simpler cases, so that specialisations do not come out of the blue, and so that students can see an end/other points that require refinement.

@_ZSt4move @lkanies @dahukanna @futurebird Same. That's why I went into physics - I kept asking questions of my engineering professors, and one finally said "If you want to know why that formula works, go talk to the Physics department."

So I did, and switched majors.

@nrohluap @_ZSt4move @dahukanna @futurebird a win for all! :)

@lkanies @dahukanna @futurebird nuclear physics was like that - we have 3 or 4 different incompatible models that solve a subset, you will learn to spot which one to use based on the phrasing of the exam question

@lkanies @dahukanna @futurebird now you've got me seriously contemplating if programming should be taught this way, or even if it already is but we just don't categorize it as well...

*Multiple times* in my life I've taken assembly language programming and introduction to object oriented programming in the same year, if not simultaneously. Which definitely feels like two ends of the spectrum there.

On the other hand, consider that saying about biology being applied chemistry which is applied physics etc...and often we learn all of those at once, ultimately approaching the same topics from multiple different directions. If you divide "programming" into things like "UX engineering", "application development", "systems design" and "computer engineering" then maybe we *do* teach it the same way...?

@admin I don’t have a CS degree, but… seems to me that most of that stuff is still not really taught.

Programming is still mostly practice, not science. (And I think it fundamentally is not a science.)

But I do like the idea of there being enough thought there that every year starts with slaying students’ beliefs about it.

The big belief that needs to die is that programming is about the computer, when it’s almost entirely about the users and the coworkers.

@lkanies @admin CS is fundamentally the study of problems, whether their solutions are computable at all, and if so what properties such solutions have.

I've talked to masters students from many different countries and unis. Some teach only the math and no programming, others no math but only programming. Most teach both.

It's a mistake to think CS is about programming. It'd be like thinking physics is about idk building pendulums

@OliverUv @lkanies Yeah the whole terminology around that needs to be cleaned up a bit IMO. At my uni I would say CS was definitely about programming. That's why it was considered "engineering" rather than "science".

I often find myself comparing CS to law or medicine, in that you deal with complex systems, often with incomplete information...you write a set of rules the machine must obey with sometimes unintended consequences...and of course with the idea of having "specialties" like I mentioned in my other comment...but you don't become a lawyer or a doctor after four years of school, yet many professional programmers don't even have that...and we wonder why the quality of software that we deal with every day is often so terrible. Might not matter much if you're designing a game, but when you're handling healthcare data or security systems or cars?? Maybe we at least should make sure *those* programmers didn't learn by just copy/paste-ing from Stack Overflow...? Lol

@admin @lkanies idk, I've seen wildly varying skill levels from both juniors and seniors (of mine). Whether they studied comp sci, comp eng, or were self taught didn't seem to have any strong correlation with the quality of their craft.

Full disclosure I studied a (non-engineering) comp sci bachelor's only. Acted as lead/top dev for a company that grew from ~15 to ~2k people in 4.5 years. Trained a lot of juniors, who then went on to train even more juniors.

@OliverUv @lkanies True...and i mean I would never say that education guarantees any particular level of skill...but it should at least guarantee exposure to the important concepts. Like understanding that SQL injection is a thing for example. You don't always learn that just googling how to write SQL statements.
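For anyone who has not run into it, here is a minimal sketch of the SQL injection point, using Python's standard `sqlite3` module. The table, the column names, the helper functions and the payload are all made up for illustration; only the `sqlite3` calls are real.

```python
import sqlite3


def make_db():
    """Build a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_unsafe(conn, name):
    # Vulnerable: user input is pasted straight into the SQL text,
    # so input containing quotes can change the meaning of the query.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_safe(conn, name):
    # Parameterized: the driver passes the input as data, never as SQL.
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


# Hypothetical malicious input: closes the string and adds an always-true clause.
payload = "nobody' OR '1'='1"
```

With this payload, `find_unsafe` matches every row in the table, while `find_safe` looks for a user literally named `nobody' OR '1'='1` and finds none.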

@lkanies Yeah, I think part of the issue is we don't quite have the specialties worked out yet. I definitely learned a lot about security, systems design, compilers, even a bit of CPU architecture...never touched UX though. Pretty sure there were classes on that, I just didn't choose to take those ones. In the work environment you certainly have UX experts and OS experts and security experts...but most schools don't really have specific tracks for those so you can end up with a weird mix of classes that has no relation to what you end up doing if you haven't figured out what you ultimately want to be doing yet.

The other side of that also is the self-education. Which really makes the education environment a bit weird. Some students entering freshman year have trouble figuring out how to turn on the computer (true story), others have been programming since elementary school. And they shove both kinds of students into the same classes. I don't think that happens to the same degree in other fields. I have taken "introduction to object oriented programming" FOUR FUCKIN TIMES because everyone says "you can't skip that one" and instead maybe I could have taken a course on UX design because I suck at that lol

And yeah a lot of people are self-taught entirely but in that case the question of "how should we teach this" seems a bit less applicable? I mean I guess it's still a bit useful to consider for people creating online tutorials and such but mostly the stuff I learned myself was through seeking out specific topics, which makes it harder to learn the things you aren't looking for...

@lkanies @dahukanna @futurebird Is it not? We do the lies-to-children thing here, too. We start by saying you can do operations and tests on data structures.

if ( year - age = 1984) then ...

Then we tell you that there's only bits and the only test is zero or not-zero

add 39

add 1984

sub 2023

branch if zero

Then we go into the analog domain where 0 and 1 are tiny voltage differences and it starts feeling more like luck than science.
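The first two layers above can be sketched side by side in Python. This is only an illustration: the tiny accumulator machine, its three opcodes and the function names are invented here and do not correspond to any real instruction set.

```python
def high_level(year, age):
    # Layer 1: "you can do operations and tests on data".
    return year - age == 1984


def run_accumulator(program):
    """Layer 2: a toy one-register machine whose only test is zero / not-zero.

    `program` is a list of (opcode, operand) pairs. Returns True if the
    branch-if-zero instruction would be taken.
    """
    acc = 0
    for op, arg in program:
        if op == "add":
            acc += arg
        elif op == "sub":
            acc -= arg
        elif op == "bz":  # branch if zero
            return acc == 0
        else:
            raise ValueError(f"unknown opcode: {op}")
    return False  # program ended without reaching a branch


# The same instruction sequence as in the post: 39 + 1984 - 2023.
program = [("add", 39), ("add", 1984), ("sub", 2023), ("bz", None)]
```

Both `high_level(2023, 39)` and `run_accumulator(program)` take the branch; the second version just never gets to say "equals", only "is the result zero".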

@lkanies @dahukanna @futurebird I always found that to be comforting. Sure, the way you get told things in earlier courses is technically wrong, but it works for the purpose it was needed for, and trying to learn the quantum model is going to be pointless if you don't have to go to that level

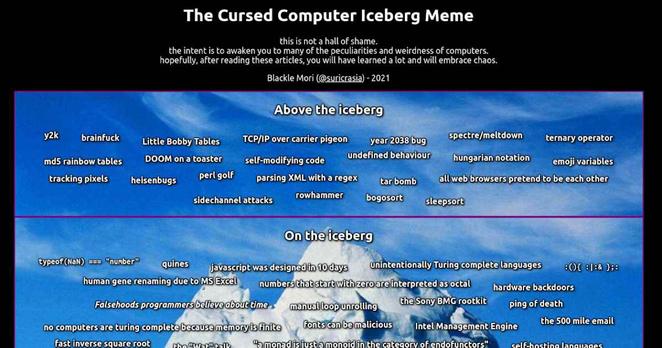

@lkanies @dahukanna @futurebird Relatedly, the Cursed Computing Iceberg: https://suricrasia.online/iceberg/

@jwz @dahukanna @futurebird thanks! I had not seen that one

@lkanies @dahukanna @futurebird My CS degree was taught kind of like this, but more like “remember that thing we treated like a black box and assumed always works? yeah here’s how it works under the hood and 500 of the ways it can fail”

Made me terrified to allocate memory, 100/10 would learn again

@joshfreedman @lkanies @dahukanna @futurebird Here's an interesting essay (and site, despite the philosophical name) that digs into how and why science and engineering are taught like that -- as if things just always work:

"...At the undergraduate level, you are mainly shielded from the failures, and systems get presented as though they were Absolute Truth. Or, at least, they are taught as though Absolute Truth lurks somewhere in the vicinity, obscured only by complex details. Recognizing that there is no Absolute Truth anywhere is a small downpayment on the price of entry to meta-systematicity."

@joshfreedman @lkanies @dahukanna @futurebird Oh, another nice quote from that essay: "Rationality expects failures due to known unknowns: parameter uncertainty, incomplete information of determinate types, and insufficient computational power, for instance. These sorts of failures can be planned for, and mitigated by adjustments within the system.

Systems don’t expect, and can’t cope with, unknown unknowns. For example: relevant common-sense observations can’t be made to fit into the model because its vocabulary doesn’t make the necessary distinctions; a sensible rule is misinterpreted in a specific case; significant aspects of the circumstances are unexpectedly not accounted for by the model at all, so it’s not even wrong, but entirely inapplicable; the system’s recommended course of action is infeasible, ignored, or obstructed, and the next-best option is outside its scope."

@invisv @joshfreedman @lkanies @dahukanna @futurebird This is related to the concept of "didactic reduction".

@lkanies @dahukanna @futurebird A whole lot of things should be taught that way. But be explicit up front for the people who bail after the first week. :-)

@lkanies @dahukanna @futurebird i think the copenhagen interpretation would go a long way to explaining a lot of software development.

@ghorwood @dahukanna @futurebird hah! That would destroy most programmers’ minds!

(Thanks, I’d never heard of this before.)

@lkanies @ghorwood @futurebird neither had (edit) I and yes, mental model, brain breaking!

@lkanies @dahukanna @futurebird i know people who somehow managed to get through a physics degree without getting this in their heads

@dahukanna @futurebird This "everything you learned is wrong" garbage is used to grab attention, sell books, and promote #clickbait. The truth is math and the sciences provide useful tools for solving problems.

@dahukanna @futurebird I would say it a little differently - "Everything you have learned so far is a special case of this wider class of mathematical objects that open new and wondrous doors!" The one fault I would find with undergrad abstract algebra and real analysis courses is that they lose sight of the practical too quickly and end up in vocabulary and definitions.

@dahukanna @futurebird So instead of "here's the definition of a field, here are classes of finite fields. Here are some operations you can use to show that two groups/fields are isomorphic", I'd start with a puzzle that's hard to solve without a new tool, then break out the new tool to solve the problem.

@dahukanna @futurebird When you get to "Everything (in analysis) is a vector space! It's groups (in algebra) all the way down!" but you're so divorced from what you learned in secondary school, it can be intimidating.

@dahukanna @futurebird But the lecturer was just explaining the concept of education being "lies-to-children". Which it is.

Maybe Stewart and Cohen explain it in a more friendly way?

@dahukanna @futurebird

The way you use the word approximation makes me think of software abstraction.

To answer your questions, you've spent time learning how to approximate something correctly. Another way to say the same thing is forming a good enough abstraction. Many of those abstractions are used daily in our lives and even other people's lives may depend on them. This also answers the second question. By approximation!

@thgs @futurebird but it was taught as “absolute” fact, not an “abstraction”.

I know they both start with the letter “a” but could not be more different -

It's a matter of perspective, I think.

Client code that calls an abstraction looks at the abstraction and everything about it is "absolute". There's a public interface to it, client code can depend on its "absoluteness".

Same thing happens when people teach other people an approximation. Presented as "absolute" in order to make sense and maintain the level of precision the approximation has.

A clandestine sense of absolute, only under specific perspectives.
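A rough sketch of that perspective in Python, borrowing the chemistry example from earlier in the thread. Everything named here (`GasModel`, `IdealGas`, `VanDerWaalsGas`, `tank_pressure`) is hypothetical; the only outside facts assumed are the ideal gas law, the van der Waals equation and the value of the molar gas constant.

```python
from typing import Protocol

R = 8.314462618  # molar gas constant in J/(mol*K)


class GasModel(Protocol):
    """The public interface: from the client's side this is 'absolute'."""

    def pressure(self, n: float, temperature: float, volume: float) -> float:
        ...


class IdealGas:
    """First-year approximation: P = nRT / V."""

    def pressure(self, n: float, temperature: float, volume: float) -> float:
        return n * R * temperature / volume


class VanDerWaalsGas:
    """A refinement behind the same interface; a and b are substance-specific."""

    def __init__(self, a: float, b: float) -> None:
        self.a = a
        self.b = b

    def pressure(self, n: float, temperature: float, volume: float) -> float:
        return n * R * temperature / (volume - n * self.b) - self.a * n**2 / volume**2


def tank_pressure(model: GasModel, n: float, temperature: float, volume: float) -> float:
    # Client code: it depends only on the interface and never asks
    # which approximation is answering.
    return model.pressure(n, temperature, volume)
```

`tank_pressure(IdealGas(), 1.0, 273.15, 0.022414)` comes out at roughly one atmosphere in pascals. Swapping in `VanDerWaalsGas` changes the answer slightly without changing the caller, which is the sense in which the approximation looks "absolute" from one side only.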